The concepts associated with high definition (HD) video can be confusing if you are unfamiliar with how video cameras work. If you are a beginning filmmaker, terms like scan lines, SD, HD, and 4k technology will certainly make your head spin!

Fear not, for the concepts are surprisingly straightforward. In this lesson, we will cover the basics of high definition video and provide you with a working understanding of the terminology. In addition, we will look at 4k technology, also known as ultra HD. This technology is used by the groundbreaking Red One camera, introduced by the Red Digital Cinema Company in 2007.

To understand high definition video, we must start at the beginning and examine how images are recorded by a video camera.

Recording

As mentioned in the previous lesson, there are three mediums used to record video images:

- magnetic tape

- removable memory card

- hard drive

The preferred medium for cameras (and audio recorders) is the removable memory card. Hard drives were initially expected to fill that role, but removable cards proved simpler and more portable. Today, hard drives are used primarily for editing, while magnetic tape is preferred for archiving.

Although recording hardware has moved from analog to digital technology since the inception of video recording, the principle behind tape and hard drive recording has stayed essentially the same: electromagnetism.

The easiest way to illustrate the principles of electromagnetism is with magnetic tape. The recording head is essentially an electromagnet, which is activated by an electrical signal from the image processor. As the videotape travels over the head, the iron particles in the tape are magnetized. This is, in essence, the recorded image.

Scan Lines

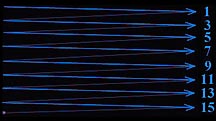

The video image is recorded one horizontal line at a time. These lines are called scan lines, and the process is known as scanning. If you look closely at a TV screen, you will see the scan lines. You probably can't see them on your computer monitor because the lines are narrower than on a TV.

SCAN LINES

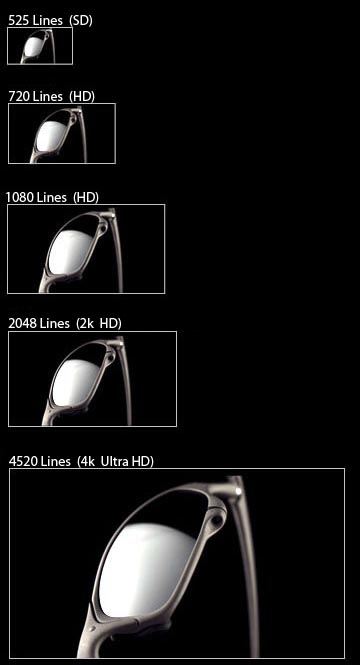
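To make the idea of scanning concrete, here is a simplified Python sketch. It is not how any real camera encodes its signal. A frame is modeled as a grid of brightness values, using a hypothetical 3-line, 4-sample frame. The function `scan` reads the frame out one horizontal line at a time, top to bottom, into a single serial stream, and `rebuild` reassembles that stream into lines. Real video signals also carry timing information, and interlaced formats alternate between odd and even lines; this sketch ignores both.

```python
from typing import List

Frame = List[List[int]]  # rows of brightness values (0-255), top row first


def scan(frame: Frame) -> List[int]:
    """Read the frame out one horizontal line at a time, top to bottom,
    producing a single serial stream of samples."""
    signal: List[int] = []
    for line in frame:       # each row is one scan line
        signal.extend(line)  # samples taken left to right along the line
    return signal


def rebuild(signal: List[int], width: int) -> Frame:
    """Reassemble the serial stream into scan lines of `width` samples each."""
    return [signal[i:i + width] for i in range(0, len(signal), width)]


if __name__ == "__main__":
    # A hypothetical 3-line, 4-sample frame
    frame = [
        [10, 20, 30, 40],
        [50, 60, 70, 80],
        [90, 100, 110, 120],
    ]
    signal = scan(frame)
    print(signal)
    print(rebuild(signal, width=4) == frame)
```

The function names and frame layout are illustrative assumptions. The point is simply that a two-dimensional picture becomes a one-dimensional signal when it is read line by line.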

Standard Definition (SD)

The term "definition" refers to the visible detail in the video image. It is measured by the number of horizontal scan lines in a single frame. In the United States and Japan, standard definition video has 525 lines. In most European countries, standard definition has 625 lines. (The former is known as NTSC; the latter is PAL.)

High Definition (HD)

Although there has been much hype about HD, the concept itself is simple to understand. Technically, anything that breaks the PAL barrier of 625 lines can be called high definition. The most common HD formats feature 720 and 1080 scan lines.
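The rule of thumb above can be expressed as a tiny classifier. This Python sketch uses only the line counts quoted in this lesson and treats anything above PAL's 625 lines as HD. `definition_class` and `FORMATS` are hypothetical names, and the rule is this lesson's simplification rather than a formal broadcast standard.

```python
# Scan-line counts quoted in this lesson
FORMATS = {
    "NTSC (SD)": 525,
    "PAL (SD)": 625,
    "720-line HD": 720,
    "1080-line HD": 1080,
}

PAL_LINES = 625  # the "PAL barrier"


def definition_class(scan_lines: int) -> str:
    """Classify a format using this lesson's rule of thumb:
    more than 625 scan lines counts as high definition."""
    return "HD" if scan_lines > PAL_LINES else "SD"


if __name__ == "__main__":
    for name, lines in FORMATS.items():
        print(f"{name}: {lines} lines -> {definition_class(lines)}")
```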

Ultra High Definition

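Ultra high definition features an amazing 4,520 lines of horizontal resolution. Unlike the SD and HD figures above, this number describes detail across the width of the frame rather than the number of scan lines. The technology is known as "4k" because this horizontal resolution exceeds 4,000, and it will no doubt be the future industry standard.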

The following photos show the relative size of the different formats. The first one represents the typical digital video frame (DV and DVCAM).

Notice how detail improves as the number of scan lines increases. The final photo illustrates the huge leap in image detail that 4k technology provides.
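This lesson measures definition by scan-line count, so the jump between formats can be expressed as a simple ratio. The sketch below compares the line counts quoted earlier (625 for PAL, and 720 and 1080 for HD) against 525-line NTSC SD. `relative_lines` is a hypothetical helper. A line count captures only the vertical dimension of the image, so the ratio does not account for the extra horizontal detail that HD formats also add.

```python
# Scan-line counts quoted in this lesson
NTSC_SD_LINES = 525

FORMATS = {
    "PAL SD": 625,
    "720-line HD": 720,
    "1080-line HD": 1080,
}


def relative_lines(lines: int, baseline: int = NTSC_SD_LINES) -> float:
    """Return how many times more scan lines a format has than the baseline."""
    return lines / baseline


if __name__ == "__main__":
    for name, lines in FORMATS.items():
        print(f"{name}: {relative_lines(lines):.2f}x the scan lines of NTSC SD")
```

By this measure, 1080-line HD has a little more than twice the scan lines of NTSC SD.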

As a point of reference, the typical flat computer monitor has 2,000 lines of resolution. 35mm film, as perceived by the human eye, falls in the mid HD range. For more on 35mm comparisons, please see our sample lesson: HD vs. 35mm.

The Red One's 4k technology is based on the proprietary 12-megapixel chip developed by the Red Digital Cinema Company. The company's affordable Red One camera can shoot in all the popular formats, including those shown above. 4k technology may prove to be the death knell for 35mm film.
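To put the 12-megapixel figure in perspective, here is a quick pixel-count comparison in Python. It assumes the common 1920 × 1080 pixel frame for 1080-line HD, since this lesson gives only line counts. It also uses the 12-megapixel chip size quoted above. The Red One's actual recording dimensions are not given here, so the sketch compares total pixel counts only. `megapixels` is a hypothetical helper.

```python
def megapixels(width: int, height: int) -> float:
    """Return the number of pixels in a width x height frame, in millions."""
    return width * height / 1_000_000


# Assumed frame size for 1080-line HD (a common format, not stated in this lesson)
HD_1080_MP = megapixels(1920, 1080)

# Chip size quoted in this lesson for the Red One
RED_CHIP_MP = 12.0

if __name__ == "__main__":
    print(f"1080 HD frame: {HD_1080_MP:.2f} megapixels")
    print(f"12 MP chip vs. 1080 HD frame: {RED_CHIP_MP / HD_1080_MP:.1f}x the pixels")
```

On these assumptions, the chip has roughly 5.8 times as many pixels as a 1080 HD frame. Raw pixel count is only one ingredient of perceived sharpness, so treat the figure as a rough comparison.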